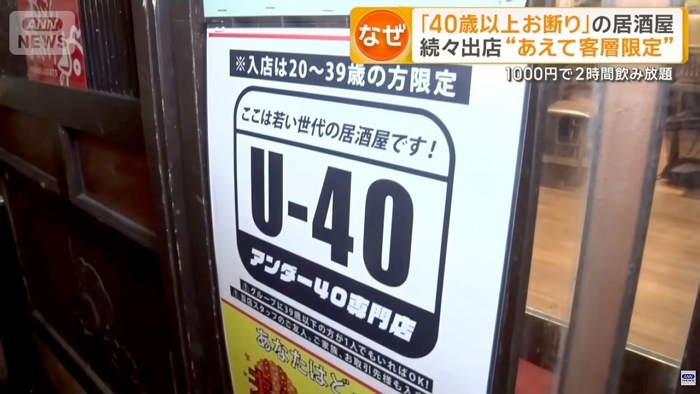

A bank manager in the U.A.E. was conned into authorizing a $35 million transfer after receiving vocal confirmation from the account holder. Only the caller wasn’t who the manager thought he was…

Early last year, the manager of an undisclosed bank in the United Arab Emirates received a call from a longtime client – the director of a company with whom he had spoken before. The man was excited that his company was about to make an important acquisition, so he needed the bank to authorize a transfer of $35 million as soon as possible. The client added that a lawyer named Martin Zelner had been contracted to handle the acquisition, and the manager could see emails from the lawyer in his inbox. He had spoken to the client before, he recognized his voice, and everything he said checked out. So he proceeded to make the most expensive mistake of his career…

Photo: B_A/Pixabay

The bank manager authorized the bank transfers, confident that everything was legitimate, but in reality, he had just fallen victim to an elaborate, high-tech swindle. The person he had talked to on the phone had used AI-powered “deepvoice” technology to clone the bank client’s voice and make it impossible for anyone to tell it apart from the original.

The mega-heist was revealed in court documents in which the U.A.E. was seeking American investigators’ help in tracking down $400,000 of the $35 million, which apparently went into U.S.-based accounts held by Centennial Bank.

Not much information has been revealed in this case, apart from the fact that U.A.E. authorities believe the elaborate scheme involved at least 17 people and that the money was sent to accounts all over the world to make it difficult to track down and recover. Neither the Dubai Public Prosecution Office nor the American lawyer mentioned by the fraudsters, Martin Zelner, had responded to Forbes’ request for comment at the time of this writing.

It’s only the second known case of a bank heist involving deepvoice technology, with the first occurring in 2019, but cybercrime experts warn that this is only the beginning. Both deepfake video and deepvoice technologies have evolved at an astonishing pace, and criminals are bound to take advantage of them.

“Manipulating audio, which is easier to orchestrate than making deep fake videos, is only going to increase in volume and without the education and awareness of this new type of attack vector, along with better authentication methods, more businesses are likely to fall victim to very convincing conversations,” cybersecurity expert Jake Moore said. “We are currently on the cusp of malicious actors shifting expertise and resources into using the latest technology to manipulate people who are innocently unaware of the realms of deep fake technology and even their existence.”

The thought of a sample of your voice, be it from a YouTube or Facebook video, or from a simple phone call, being used to clone your voice for malicious purposes is sort of terrifying…